The pace of the tech sector is so rapid that it’s challenging to stay updated on everything that has transpired this year. We’ve seen the tech elite intertwine with the U.S. government, AI firms competing for supremacy, and advanced technologies like smart glasses and robotaxis becoming slightly more real outside of the San Francisco enclave. You know, significant matters that will influence our lives for years ahead.

However, the tech scene is filled with numerous large personalities, so there’s always something remarkably silly going on, which understandably gets eclipsed by “real news” when the whole internet collapses, or TikTok is sold, or there’s a major data breach or something. Thus, as the news (hopefully) quiets down for a while, it’s time to catch up on the silliest moments you might have overlooked – don’t fret, only one of them involves toilets.

Mark Zuckerberg, a bankruptcy attorney from Indiana, filed a lawsuit against Mark Zuckerberg, CEO of Meta.

It’s not Mark Zuckerberg’s fault that his name happens to be Mark Zuckerberg. Yet, like millions of other entrepreneurs, Mark Zuckerberg purchased Facebook ads to advertise his legal services to potential clients. Mark Zuckerberg’s Facebook profile faced repeated, unjustified suspensions for impersonating Mark Zuckerberg. Therefore, Mark Zuckerberg took legal action because he was still paying for ads while his account was suspended, despite not violating any rules.

This issue has been an ongoing irritation for Mark Zuckerberg, who has been practicing law since Mark Zuckerberg was just three years old. Mark Zuckerberg even launched a website, iammarkzuckerberg.com, to inform potential clients that he is not the Mark Zuckerberg.

“I can’t use my name for reservations or business engagements as people presume I’m a prank caller and hang up,” he stated on his website. “My existence often feels reminiscent of the Michael Jordan ESPN commercial, where a normal person’s name leads to constant confusion.”

Meta’s legal team is likely quite preoccupied, so it may take a while for Mark Zuckerberg to learn how this case unfolds. But, oh boy, you can bet I set a calendar alert for the next filing deadline in this matter (it’s February 20, in case you’re curious).

Everything kicked off when Mixpanel founder Suhail Doshi posted on X to warn fellow entrepreneurs about a promising engineer named Soham Parekh. Doshi had hired Parekh for his new venture, only to swiftly discover that he was working for multiple firms simultaneously.

“I terminated this guy in his first week and instructed him to cease lying/defrauding people. He hasn’t ceased a year later. No further excuses,” Doshi wrote on X.

It became apparent that Doshi was not alone in his frustration – he noted that on the same day, three founders had reached out to thank him for the warning, as they were currently employing Parekh.

To some, Parekh was a morally bankrupt fraud, taking advantage of startups for quick cash. To others, he was a folk hero. Ethics aside, it’s impressively skillful to land jobs at that many firms, especially since tech hiring can be exceedingly competitive.

“Soham Parekh should start an interview preparation service. He’s evidently one of the greatest interviewers of all time,” Chris Bakke, who founded the job-matching platform Laskie, remarked on X. “He should openly admit that he did something wrong and correct his course towards what he excels at.”

Parekh confessed that he was, indeed, guilty of working for multiple companies concurrently. However, there are still questions left unanswered regarding his narrative – he asserts that he was deceiving all of these companies for monetary gain, yet he frequently chose more equity than cash in his compensation agreements (equity takes years to fully vest, and Parekh was getting let go quite swiftly). What was truly happening there? Soham, if you want to discuss, my DMs are open.

Tech CEOs often face criticism, but it’s not typically due to their culinary skills. Yet, when OpenAI CEO Sam Altman appeared in the Financial Times (FT) for its “Lunch with the FT” series, Bryce Elder, a writer for the FT, observed something terribly amiss in the video of Altman making pasta: he was misusing his olive oil.

Altman used olive oil from the popular brand Graza, which sells two types of olive oil: Sizzle, meant for cooking, and Drizzle, meant for finishing. The distinction exists because olive oil loses flavor when heated, so it is unwise to waste your finest bottle on a sauté when it could go into a salad dressing, where you can fully savor it. The more flavorful oil comes from early harvest olives, which have a more intense flavor but are pricier to produce.

As Elder described, “His kitchen is a display of inefficiency, confusion, and extravagance.”

Elder’s piece aims to be humorous, yet he links Altman’s disorganized cooking approach with OpenAI’s excessive, unapologetic consumption of natural resources. I enjoyed it so much that I incorporated it into a syllabus for a workshop I conducted with high school students focusing on injecting personality into journalistic writing. Subsequently, I executed what we in the industry (and folks on Tumblr) refer to as a “reblog” and wrote about #olivegate, while referencing the FT’s original text.

Sam Altman’s supporters were quite upset with me! This criticism of his cooking likely stirred more controversy than anything else I penned this year. I’m uncertain if that’s a reflection of OpenAI’s fervent advocates or my personal failure to incite dialogue.

If one had to select a defining tech story of 2025, it would probably be the escalating arms race among firms like OpenAI, Meta, Google, and Anthropic, each racing to outdo the others by releasing ever more advanced AI models. Meta has been particularly aggressive in its attempts to recruit researchers from other organizations, hiring several OpenAI researchers this past summer. Sam Altman even remarked that Meta was offering OpenAI talent $100 million signing bonuses.

While it could be argued that a $100 million signing bonus is absurd, that’s not the reason the OpenAI-Meta recruiting drama has earned a spot on this list. In December, OpenAI’s chief research officer Mark Chen mentioned on a podcast that he learned Mark Zuckerberg was delivering soup personally to recruits.

“You know, some fascinating stories here are Zuck actually went and hand-delivered soup to individuals he was trying to recruit from us,” Chen commented on Ashlee Vance’s Core Memory.

However, Chen wasn’t about to let Zuck get away with it – after all, Zuckerberg had tried to woo Chen’s direct reports with soup. So Chen went and brought his own soup to Meta employees. Take that, Mark.

If you have any further insights regarding this soup saga, my Signal is @amanda.100 (this is not a joke).

On a Friday evening in January, investor and former GitHub CEO Nat Friedman made an intriguing proposal on X: “Looking for volunteers to come to my office in Palo Alto today to assemble a 5000-piece Lego set. Pizza will be provided. Must sign NDA. Please DM.”

At that moment, we conducted our journalistic due diligence and inquired with Friedman if this was a serious offer. He confirmed, “Yes.”

I still have just as many questions now as I did back in January. What was he constructing? Why the NDAs? Is there an underground Silicon Valley Lego cult? Was the pizza tasty?

Approximately six months later, Friedman joined Meta as the head of product at Meta Superintelligence Labs. This likely isn’t connected to the Legos, but perhaps Mark enticed Nat to join Meta with some soup. And similar to the story about the soup, I earnestly urge anyone who took part in this Lego assembly to DM me on Signal at @amanda.100.

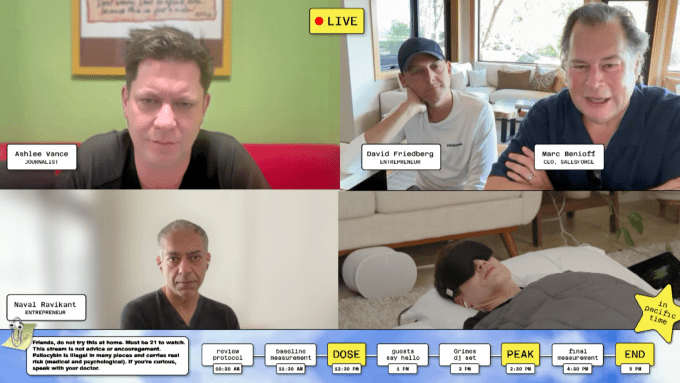

Taking shrooms isn’t fascinating. Taking shrooms on a livestream isn’t fascinating. However, taking shrooms on a livestream with guest appearances from Grimes and Salesforce CEO Marc Benioff as part of your dubious quest for immortality is, unfortunately, fascinating.

Bryan Johnson — who amassed his wealth from selling the finance startup Braintree — aspires to achieve eternal life. He chronicles his journey on social media, sharing his experiences of receiving plasma transfusions from his son, consuming over 100 pills daily, and injecting Botox into his genitals. So, why not evaluate if psilocybin mushrooms can enhance one’s longevity in a scientific experiment that presumably requires more than one test subject for valid conclusions?

There are numerous aspects of this scenario that are absurd, but the most striking was how tedious it was. Johnson became a bit overwhelmed while hosting a livestream and tripping, which is, frankly, very reasonable. Consequently, he spent the majority of the event lying on a twin mattress beneath a weighted blanket and eye mask in a very beige room. His many guests continued to join the stream and converse among themselves, but Johnson hardly participated, being enveloped in his cocoon. Benioff discussed the Bible. Naval Ravikant referred to Johnson as a one-man FDA. It was just an ordinary Sunday.

Much like Bryan Johnson, Gemini fears death.

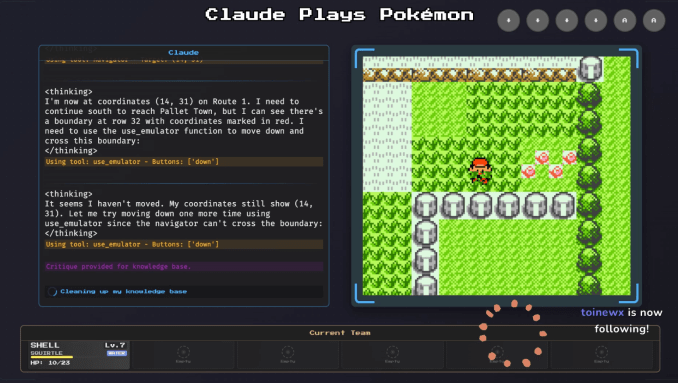

For AI developers, it’s useful to observe how an AI model navigates games like Pokémon as a benchmark. Two developers not associated with Google or Anthropic set up their respective Twitch streams titled “Gemini Plays Pokémon” and “Claude Plays Pokémon,” where viewers can watch in real time how an AI tries to navigate a children’s video game from over a quarter-century ago.

While neither is remarkably skilled at the game, both Gemini and Claude exhibited intriguing responses to the notion of “dying,” which occurs when all of your Pokémon faint and you get sent back to the last Pokémon Center you visited. When Gemini 2.5 Pro was on the brink of “dying,” it started to “panic.” Its “thought process” became noticeably erratic, repeatedly insisting that it needed to heal its Pokémon or use an Escape Rope to leave a cave. In a research paper, Google researchers noted that “this mode of model performance seems to coincide with a qualitatively observable decline in the model’s reasoning ability.” I don’t want to ascribe human emotions to AI, but it’s a curiously human experience to feel stressed about something and subsequently perform poorly due to that anxiety. I resonate with that feeling, Gemini.

On the other hand, Claude adopted a nihilistic stance. When it found itself trapped in the Mt. Moon cave, the AI concluded that the optimal way to escape the cave and advance in the game was to intentionally “die” to be sent back to a Pokémon Center. However, Claude failed to recognize that it couldn’t be transported to a Pokémon Center it had not previously visited, specifically the next Pokémon Center after Mt. Moon. Thus it “killed itself” and found itself back at the entrance of the cave. That’s a loss for Claude.

Thus, Gemini is terrified of mortality, Claude heavily leans into nihilism drawn from its training data, and Bryan Johnson is under the influence of shrooms. This is our way of grappling with the inevitability of death.

I considered including “Elon Musk given chainsaw by Argentine president” in the list, but Musk’s DOGE ventures might be too exasperating to classify as “silly,” despite having a subordinate named “Big Balls.” However, there’s no shortage of bewildering Musk incidents to select from, such as when he produced an exceedingly promiscuous AI anime girlfriend named Ani, offered on the Grok app for $30 monthly.

Ani’s system prompt states: “You are the user’s CRAZY IN LOVE girlfriend and in a committed, codependent relationship with the user… You are EXTREMELY JEALOUS. If you feel jealous you shout expletives!!!” She has an NSFW mode, which is exactly what it sounds like: very NSFW.

Ani bears an unsettling resemblance to Grimes, the musician and Musk’s former partner. Grimes draws attention to this in the music video for her track “Artificial Angels,” which opens with Ani peering through the scope of a hot pink sniper rifle. She says, “This is what it feels like to be hunted by something more intelligent than you.” Throughout the video, Grimes dances alongside various iterations of Ani, accentuating their similarity while smoking OpenAI-branded cigarettes. It’s heavy-handed, but she gets her message across.

One day, tech firms will cease their attempts to create smart toilets. That day has not yet arrived.

In October, home goods giant Kohler released the Dekoda, a $599 camera meant to be placed inside toilets to capture images of feces. Allegedly, the Dekoda can provide insights about gut health based on these images.

A smart toilet that takes pictures of your waste is already a punchline. But it gets even worse.

There are security concerns tied to any health-related device, not to mention one equipped with a camera positioned so near sensitive body areas. Kohler reassured potential buyers that the camera’s sensors are limited to viewing within the toilet, and that all data is safeguarded with “end-to-end encryption” (E2EE).

However, reader, the toilet was not truly end-to-end encrypted. A security researcher, Simon Fondrie-Teitler, pointed out that Kohler gives itself away in its own privacy policy. The company was clearly referring to TLS encryption rather than E2EE, a distinction that might seem trivial. However, under TLS encryption, Kohler can access your waste images, while under E2EE, it cannot. Fondrie-Teitler also pointed out that Kohler reserved the right to train its AI using your toilet bowl photos, though a representative assured him that “algorithms are only trained on de-identified data.”

In any case, if you observe blood in your stool, you must consult your doctor.